The insatiable computational appetite of artificial intelligence has created a chasm between the data center industry’s ambitions and the physical limitations of the world’s electrical grids. The race is no longer just about processing speed or storage capacity; it is a frantic, high-stakes sprint for megawatts. This new reality is forcing a complete reinvention of how data centers are planned, built, and powered, shifting the industry’s focus from long-term efficiency to the immediate, critical need for operational energy. What was once a predictable development cycle has been replaced by a dynamic and sometimes unconventional scramble, fundamentally reshaping the landscape for all stakeholders.

The AI Tsunami: A Paradigm Shift in Power Demand

The rapid ascent of artificial intelligence is not merely an evolution for the data center industry; it is a seismic event. The colossal energy demands of training and running AI models have transformed data centers from significant power consumers into foundational pillars of the modern electrical load, rivaling entire cities in their consumption. This surge has forged a new ecosystem where traditional lines are blurring. Hyperscalers, utility providers, and hardware manufacturers like NVIDIA are no longer operating in sequential partnership but in a deeply intertwined, often frantic, collaboration.

This new environment is driven by a single, non-negotiable imperative: securing massive amounts of power on radically compressed timelines. The value of AI infrastructure is realized only when it is operational, and any delay in power delivery translates directly into lost revenue and competitive disadvantage. Consequently, a new consensus has emerged across the industry, acknowledging that the slow, bureaucratic processes of the past are obsolete. The focus has shifted entirely toward innovative strategies that can bring gigawatts online in months, not years, creating unprecedented challenges and opportunities for every player in the value chain.

The Emerging Blueprint for Unprecedented Growth

Redefining Development: Strategies to Bypass the Gridlock

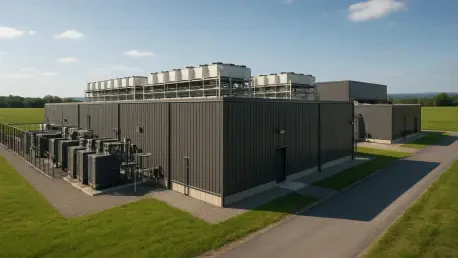

To circumvent the multi-year delays associated with traditional grid interconnection, data center developers are deploying a range of aggressive and innovative strategies. One of the most effective tactics is co-locating new facilities directly adjacent to existing or planned power generation sources. By sharing a point of interconnection with a power plant, operators can shave years off their project timelines, effectively piggybacking on established infrastructure. This approach sidesteps the lengthy and complex process of securing a new, high-capacity connection to the transmission network.

Another critical strategy gaining momentum is the deployment of behind-the-meter (BTM) power generation. Developers are increasingly building their own power plants, often fueled by natural gas, on-site to energize their facilities independently of the grid. This allows construction and operations to commence almost immediately, with a connection to the broader grid established later. To further accelerate deployment, some are even exploring radical design simplifications, such as eliminating complex components like uninterruptible power supply (UPS) systems. Looking further ahead, forward-thinking operators are investigating advanced technologies, including the acquisition of small modular reactors (SMRs), to secure dense, long-term power for future campuses.

By the Numbers: Quantifying the AI Power Deficit

The gap between available power and projected AI-driven demand is staggering, and nowhere is this more evident than in Texas. The state’s grid, managed by the Electric Reliability Council of Texas (ERCOT), can supply up to 85 gigawatts (GW) at peak times. However, a colossal 230 GW of planned capacity, predominantly from data centers and other large industrial users, currently sits in the interconnection queue. This backlog illustrates a fundamental crisis: the existing grid infrastructure and regulatory frameworks were never designed to accommodate such an explosive and concentrated surge in demand.
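As a rough illustration of the scale involved, the two figures quoted above can be compared directly. This is only a back-of-the-envelope sketch: the variable and function names are illustrative, and it assumes the 230 GW in the queue can be set against the 85 GW peak figure as-is, even though not every queued project will necessarily be built.

```python
# Figures quoted in the text above; names are illustrative, not from any ERCOT dataset.
ERCOT_PEAK_SUPPLY_GW = 85
QUEUED_CAPACITY_GW = 230


def queue_to_peak_ratio(queued_gw: float, peak_gw: float) -> float:
    """Return queued capacity expressed as a multiple of peak supply."""
    return queued_gw / peak_gw


ratio = queue_to_peak_ratio(QUEUED_CAPACITY_GW, ERCOT_PEAK_SUPPLY_GW)
excess_gw = QUEUED_CAPACITY_GW - ERCOT_PEAK_SUPPLY_GW

# Prints: Queue is 2.7x peak supply (145 GW beyond it)
print(f"Queue is {ratio:.1f}x peak supply ({excess_gw} GW beyond it)")
```

Under that assumption, the queue amounts to roughly 2.7 times what the grid can supply at peak.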

This power deficit is amplified by powerful economic drivers. The profitability of modern AI data centers is directly tied to the timely deployment of high-value hardware, particularly GPUs. A delay in securing power means that expensive, pre-ordered processors cannot be installed, which can force the operator to forfeit the hardware to another customer and lose its immediate revenue-generating capability. This high-stakes dynamic has made “speed to power” the central variable in project finance and profitability, justifying massive upfront investments in private power generation and other creative solutions to minimize any delay between construction completion and operational launch.

Gridlock and Green Goals: The Core Challenges Ahead

The primary obstacle hamstringing the industry’s growth is the slow and overburdened process of grid interconnection. In many regions, the timeline for securing approval and completing the necessary infrastructure upgrades can stretch from five to ten years. This gridlock is a product of aging infrastructure, complex regulatory hurdles, and a sequential queueing system that cannot handle the current volume of requests. For an industry that measures hardware cycles in months, such protracted timelines are untenable and represent the single greatest threat to meeting AI-driven demand.

This urgent need for speed is creating a complex tension with long-term sustainability objectives. While many leading data center operators have committed to ambitious decarbonization goals, the immediate requirement for reliable, large-scale power is forcing a pragmatic reliance on fossil fuels. Natural gas power plants, for instance, can be built relatively quickly and are fueled by an abundant resource, making them an essential stopgap solution. This creates an unavoidable trade-off, where the short-term necessity of powering AI infrastructure often defers or complicates the industry’s broader push toward a carbon-neutral future.

Rewriting the Rules: How Policy is Forging a New Path

In response to the growing energy crisis, policymakers are beginning to rewrite the regulatory playbook to facilitate faster deployment. Texas has emerged as a model for reform with the passage of landmark legislation like Senate Bill 6, which directly addresses the grid interconnection bottleneck. These new policies are designed to break the logjam that has left hundreds of gigawatts of projects waiting for approval, creating a more agile and responsive regulatory environment.

The reforms introduce several key changes aimed at accelerating the process. One of the most significant is the shift from a first-come, first-served queue to a batch processing system, allowing groups of qualified projects to move forward simultaneously. This dramatically shortens the waiting period for well-prepared applicants. Furthermore, the legislation overhauls how the costs of grid upgrades are allocated, requiring new high-demand projects like data centers to bear a more direct and equitable share of the infrastructure expenses they necessitate. This not only speeds up the funding and construction of new transmission lines but also protects residential consumers from shouldering the financial burden of industrial growth.
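The difference between the two queueing approaches can be sketched with a toy model. It is not a description of how Senate Bill 6 or any grid operator actually works: the study durations, the `ready` flag, and all function names are hypothetical, chosen only to show why a serial queue makes later applicants wait longer while a batch study treats qualified projects together.

```python
from dataclasses import dataclass

# Hypothetical study durations, chosen only to make the comparison concrete.
SERIAL_STUDY_MONTHS = 6  # one project studied at a time
BATCH_STUDY_MONTHS = 9   # one study covering a whole batch


@dataclass
class Project:
    name: str
    ready: bool  # hypothetical readiness flag for a "qualified" project


def serial_wait_months(queue: list[Project]) -> dict[str, int]:
    """First-come, first-served: each project waits for every study ahead of it."""
    return {p.name: (i + 1) * SERIAL_STUDY_MONTHS for i, p in enumerate(queue)}


def batch_wait_months(queue: list[Project]) -> dict[str, int]:
    """Batch processing: all ready projects are studied together in one pass."""
    return {p.name: BATCH_STUDY_MONTHS for p in queue if p.ready}


queue = [
    Project("A", ready=True),
    Project("B", ready=False),
    Project("C", ready=True),
    Project("D", ready=True),
]

print(serial_wait_months(queue))  # {'A': 6, 'B': 12, 'C': 18, 'D': 24}
print(batch_wait_months(queue))   # {'A': 9, 'C': 9, 'D': 9}
```

In this toy model the unprepared project B still occupies a slot in the serial queue and delays C and D, whereas the batch approach lets the three prepared projects finish together.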

Powering Tomorrow: The Future of Data Center Energy

Looking ahead, the relationship between data centers and the power grid is poised for a fundamental transformation. The current trend toward on-site, behind-the-meter generation is likely to become standard practice for large-scale deployments, with data center campuses functioning as self-contained energy islands that can operate independently or sell excess capacity back to the grid. This distributed model promises greater resilience and faster deployment times, reducing reliance on strained public infrastructure.

The long-term energy landscape will also be shaped by emerging technologies. The commercial viability of small modular reactors could be a game-changer, offering a source of dense, carbon-free power that is well suited to the demands of hyperscale AI. As these technologies mature, the industry will face the ongoing challenge of reconciling its exponential growth with its environmental commitments. The future will likely involve a hybrid approach, combining on-site generation, advanced nuclear, and strategic grid integration to build a power ecosystem that is both robust and sustainable.

The Verdict: Speed as the New Competitive Mandate

The evidence points to a clear conclusion: the AI revolution has fundamentally reordered the priorities of the data center industry. Success is no longer defined by traditional metrics like cost per square foot or fiber connectivity but by a single, overriding factor: the velocity at which megawatts can be delivered to a server rack. This imperative for “speed to power” has become the new competitive mandate, eclipsing nearly every other consideration.

In response to this paradigm shift, a new blueprint for growth is actively being implemented. It is a multifaceted strategy built on regulatory reform, innovative siting, on-site power generation, and a pragmatic re-evaluation of sustainability timelines. While the race for AI power is fraught with immense logistical, financial, and environmental challenges, the industry’s determined pivot toward this new model demonstrates a clear path forward. The operators who successfully master this new reality will be the ones positioned to power the next era of technological innovation.