Imagine a global financial institution facing a critical decision: selecting a data center to host its transaction systems, where even a minute of downtime could cost millions in lost revenue and eroded trust. In an era where digital infrastructure underpins nearly every industry, the stakes for choosing the right facility have never been higher. Data centers are the backbone of modern IT operations, and classification systems provide the roadmap for navigating their complex landscape. This review dives into the frameworks that define and evaluate these vital facilities, assessing their features, performance metrics, and relevance to business needs in today’s tech-driven environment.

Core Frameworks Driving Classification

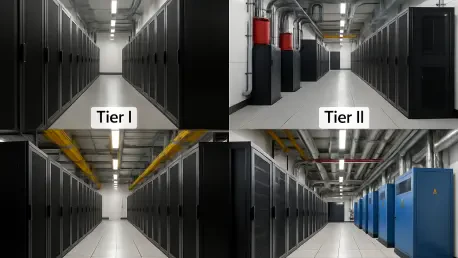

The foundation of data center evaluation lies in structured systems that measure reliability, capacity, and operational focus. Among these, the Uptime Institute’s Tier system stands as a benchmark for assessing a facility’s ability to maintain operations under stress. It spans from Tier I, which offers basic capacity with limited redundancy, to Tier IV, which is designed to be fault tolerant through multiple independent systems, so that a single equipment failure or maintenance event does not interrupt IT operations. This framework helps businesses prioritize resilience, and its rigorous certification process adds a layer of trust, distinguishing verified facilities from those making unverified claims.
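To make the gap between tiers concrete, it can help to convert an availability percentage into expected downtime per year. The sketch below assumes the availability figures commonly quoted for each tier in industry material (99.671%, 99.741%, 99.982%, 99.995%); these numbers do not come from this review and should be treated as illustrative rather than as certified guarantees. The function and variable names are hypothetical.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years

# Assumption: commonly quoted availability percentages per tier.
# They are illustrative only and are not taken from this review.
TIER_AVAILABILITY_PERCENT = {
    "Tier I": 99.671,
    "Tier II": 99.741,
    "Tier III": 99.982,
    "Tier IV": 99.995,
}


def annual_downtime_hours(availability_percent: float) -> float:
    """Return the expected hours of downtime per year for a given availability."""
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability_percent must be between 0 and 100")
    return HOURS_PER_YEAR * (1.0 - availability_percent / 100.0)


if __name__ == "__main__":
    for tier, percent in TIER_AVAILABILITY_PERCENT.items():
        hours = annual_downtime_hours(percent)
        print(f"{tier}: {percent}% availability, about {hours:.1f} hours of downtime per year")
```

Under these assumed figures, the difference is roughly 28.8 hours of downtime per year at Tier I versus under half an hour at Tier IV, which is why the tier level matters so much for transaction-critical workloads.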

Beyond reliability, power capacity serves as a key indicator of a data center’s scale. Measured in megawatts, this metric reflects the ability to support extensive IT equipment, crucial for large-scale operations like cloud computing. However, while a 100-megawatt facility might suggest immense capability, it reveals little about efficiency or fault tolerance, underscoring the need for a broader evaluation approach. This limitation pushes stakeholders to look at complementary metrics to form a complete picture.
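One complementary metric often used alongside raw capacity is power usage effectiveness (PUE), the ratio of total facility power to the power delivered to IT equipment. This review does not name PUE, so the sketch below is an illustration under that assumption, with hypothetical function names and made-up facility figures. It shows how two facilities with the same 100-megawatt headline capacity can support very different IT loads.

```python
def pue(total_facility_mw: float, it_load_mw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_load_mw <= 0:
        raise ValueError("it_load_mw must be positive")
    return total_facility_mw / it_load_mw


def usable_it_load_mw(total_facility_mw: float, pue_value: float) -> float:
    """IT load a facility can support at a given PUE (PUE is at least 1.0)."""
    if pue_value < 1.0:
        raise ValueError("pue_value cannot be below 1.0")
    return total_facility_mw / pue_value


if __name__ == "__main__":
    # Hypothetical facilities, both rated at 100 MW total power.
    for name, facility_pue in [("Facility A", 1.2), ("Facility B", 2.0)]:
        it_mw = usable_it_load_mw(100.0, facility_pue)
        print(f"{name}: PUE {facility_pue}, about {it_mw:.1f} MW available for IT equipment")
```

In this hypothetical comparison, the facility with a PUE of 2.0 delivers only half of its rated power to IT equipment, which is the kind of detail a megawatt figure alone cannot show.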

Another dimension of classification involves service models, such as private versus colocation setups. Private data centers cater to single organizations with specific, high-demand needs, often at significant cost. In contrast, colocation facilities offer shared space, providing cost-effective flexibility for businesses seeking to scale or diversify geographically. This distinction shapes strategic decisions, aligning infrastructure with budgetary and operational goals.

Performance Metrics and Emerging Criteria

As data center demands evolve, so do the criteria for evaluating their performance. Sustainability has emerged as a critical focus, with certifications like LEED and ISO 50001 signaling a commitment to energy efficiency. LEED rates a building’s design and construction, while ISO 50001 certifies an organization’s energy management system. Both operate at the facility or organizational level, and often fall short of addressing workload-specific impacts or renewable energy integration. For environmentally conscious enterprises, this gap highlights the importance of digging deeper into a center’s green claims.

Specialization also plays a growing role in classification, with facilities tailored for niche applications. Hyperscale centers, built for massive cloud providers, contrast with modular designs that offer scalable, localized solutions for smaller or edge-computing needs. These variations allow businesses to match infrastructure to specific use cases, whether supporting vast data lakes or enabling low-latency applications at the network edge.

The push for verified performance metrics further shapes the landscape. Unverified labels, such as “Tier 5,” which does not exist in the Uptime Institute’s four-level system, can mislead organizations about a facility’s true capabilities. This challenge emphasizes the value of formal certifications and transparent reporting, ensuring that performance claims align with reality. As complexity grows, multi-criteria evaluations are becoming indispensable for informed decision-making.
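A multi-criteria evaluation of this kind can be sketched as a simple weighted score. Everything in the example below is an assumption made for illustration: the three criteria (resilience, efficiency, affordability), the weights, the normalization rules, and the facility figures are hypothetical and are not a method prescribed by any classification body. Efficiency is expressed through power usage effectiveness (total facility power divided by IT power), and a facility with no formal Tier certification scores zero for resilience regardless of what its marketing says.

```python
from dataclasses import dataclass


@dataclass
class Facility:
    name: str
    certified_tier: int       # 1 to 4 if formally certified, 0 if unverified
    pue: float                # power usage effectiveness (1.0 is the ideal)
    monthly_cost_usd: float


def score(facility: Facility, weights: dict, max_cost_usd: float) -> float:
    """Combine normalized criteria (each in the range 0 to 1) into one weighted score."""
    resilience = facility.certified_tier / 4
    # Assumed scale: PUE 1.0 maps to 1.0, PUE 2.0 or worse maps to 0.0.
    efficiency = max(0.0, min(1.0, 2.0 - facility.pue))
    affordability = 1.0 - min(facility.monthly_cost_usd / max_cost_usd, 1.0)
    return (
        weights["resilience"] * resilience
        + weights["efficiency"] * efficiency
        + weights["affordability"] * affordability
    )


if __name__ == "__main__":
    # Hypothetical weights for a resilience-focused buyer; they sum to 1.0.
    weights = {"resilience": 0.5, "efficiency": 0.3, "affordability": 0.2}
    candidates = [
        Facility("Certified Tier IV site", 4, 1.5, 50_000),
        Facility("Self-labeled 'Tier 5' site", 0, 1.3, 40_000),
    ]
    for f in sorted(candidates, key=lambda c: score(c, weights, 100_000), reverse=True):
        print(f"{f.name}: {score(f, weights, 100_000):.2f}")
```

The point of the design is that no single figure dominates: an unverified label contributes nothing to the resilience term, while cost and efficiency still count, and the weights can be adjusted to reflect a given organization’s priorities.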

Practical Applications Across Industries

Classification systems directly influence how industries leverage data centers to meet unique demands. In sectors like healthcare, where patient data systems require uninterrupted access, high-tier facilities with formally certified resilience are non-negotiable. Financial institutions similarly rely on top-tier resilience to safeguard real-time transactions, illustrating how classifications translate into operational security.

For startups and small enterprises, colocation models often provide an accessible entry point. By sharing infrastructure, these businesses gain access to robust facilities without the burden of ownership costs, enabling rapid scaling. This flexibility showcases how classification guides resource allocation, balancing capability with affordability in competitive markets.

Unique implementations, such as micro data centers, also demonstrate the adaptability of classification frameworks. Deployed in remote or urban settings to support edge computing, these compact solutions cater to localized needs, reducing latency for IoT devices or retail analytics. Such applications reveal the practical diversity of classifications, ensuring relevance across a spectrum of technological challenges.

Challenges in Standardizing Evaluations

Despite their utility, data center classification systems face significant hurdles in achieving consistency. Technical discrepancies, such as the mismatch between broad sustainability certifications and specific server efficiency, create ambiguity for tenants seeking precise environmental impact data. This disconnect can complicate efforts to align infrastructure with green objectives.

Market-driven issues further muddy the waters, as unverified or exaggerated claims risk distorting perceptions of a facility’s quality. Businesses may inadvertently select underperforming centers based on misleading marketing, highlighting the urgent need for standardized, transparent frameworks. Ongoing industry efforts aim to address these gaps, but progress remains uneven.

Regulatory pressures add another layer of complexity, as varying regional standards for energy use and emissions influence how classifications are applied. Harmonizing these diverse requirements poses a persistent challenge, yet it also drives innovation in creating more comprehensive evaluation tools. Overcoming these obstacles is critical to maintaining trust in classification systems.

Verdict and Next Steps

This review of data center classification systems shows their indispensable role in aligning infrastructure with business imperatives. From the Uptime Institute’s Tier framework to power capacity metrics and sustainability standards, these tools offer a structured lens for evaluating performance and suitability. Their ability to guide industries through complex decisions stands out as a defining strength, even as challenges like unverified claims and standardization gaps persist.

Moving forward, stakeholders should prioritize verified certifications to ensure reliability and transparency in their selections. Advocating for enhanced sustainability metrics that address workload-specific efficiency will be crucial in meeting environmental goals. Additionally, supporting industry initiatives to standardize frameworks can help mitigate market distortions, fostering a more trustworthy ecosystem. As data center needs continue to diversify, embracing multi-criteria evaluations will empower businesses to navigate this dynamic landscape with confidence.