In an era where artificial intelligence (AI) is redefining industries and reshaping daily life, networking is a critical but largely invisible enabler of the technology. Behind every AI advancement, whether a sophisticated language model or a complex predictive algorithm, lies a vast web of infrastructure that moves data and delivers computational power. Networking, often overlooked in the spotlight of AI innovation, is emerging as the backbone that supports current advancements and determines how far future systems can scale. Insights from industry events such as Meta’s recent @Scale: Networking gathering reveal how network engineers are tackling the challenge of building systems capable of handling massive AI workloads. This article examines the role of networking in driving AI forward, from large investments in infrastructure to adapting to diverse computational demands.

Unprecedented Investments Fueling AI Growth

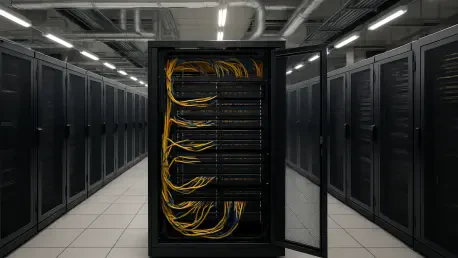

The scale of financial commitment to AI infrastructure is staggering, with industry giants investing billions in colossal computing clusters to meet soaring demand. Networking plays a central role in this effort, connecting gigawatt-scale setups akin to Meta’s Prometheus and Hyperion clusters, which rely on extensive transoceanic fiber cable systems for global reach. Temporary structures are often deployed to address immediate capacity needs, a sign of how urgently operators need to expand. This investment trend extends beyond individual companies and reflects a collective push to make AI accessible worldwide through robust connectivity. Networks are not merely conduits for data; they are the scaffolding that supports these projects, ensuring that the resources allocated to AI development translate into tangible, scalable systems able to handle the computational intensity of cutting-edge applications.

Beyond the sheer financial outlay, the strategic importance of networking in these investments lies in its ability to integrate disparate systems into a cohesive whole. The challenge is not just building bigger clusters but ensuring they operate efficiently across vast distances and diverse environments. Networking solutions must bridge gaps between data centers powered by clean energy and remote user bases, maintaining low latency and high throughput under heavy load. This requires innovative approaches to cable routing, data center design, and energy management, all of which involve network engineering. As companies race to outpace each other in AI capabilities, the reliability and reach of their networks often determine success. The emphasis on global connectivity reflects a broader industry realization: without a solid infrastructure foundation, even the most advanced AI models risk becoming isolated, underutilized assets.

Adapting Networks to Diverse AI Workloads

AI workloads have evolved dramatically, moving far beyond traditional model training to encompass a wide spectrum of tasks such as distributed inference, reinforcement learning, and specialized architectures such as mixture-of-experts models. This diversity places distinct demands on networking, requiring systems that can adjust dynamically to varying bandwidth and performance needs. Engineers are tasked with designing networks that support everything from massive GPU clusters to smaller, distributed setups without sacrificing efficiency or speed. Adapting to these shifting requirements is not just a technical challenge but a strategic necessity, ensuring that AI systems remain versatile enough to handle emerging use cases. Networking’s role in this context is to provide a flexible, responsive framework that keeps pace with AI’s rapid evolution so that infrastructure does not become a bottleneck for innovation.

Moreover, the complexity of modern AI workloads necessitates a rethinking of how networks prioritize and allocate resources. Unlike earlier, more uniform tasks, today’s AI applications often run concurrently, each with distinct latency and throughput needs that can strain conventional setups. Networking must evolve to offer granular control over data flows, ensuring that critical processes receive priority while maintaining overall system stability. This adaptability is particularly crucial as AI moves into real-time applications, where delays can have significant consequences. By developing architectures that can scale up or down based on workload demands, networking is laying the groundwork for AI systems that are not only powerful but also agile. This shift toward dynamic, workload-aware networks represents a fundamental change in how infrastructure supports AI, positioning networking as a key driver of future technological advancements.
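One way to picture the granular control described above is a small allocation routine that serves critical traffic first and divides whatever is left fairly among lower-priority flows. The sketch below is illustrative only: the `Flow` class, the `allocate` function, the flow names, and the convention that a lower priority number means more critical traffic are all assumptions made for this example, not a description of any production scheduler.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    name: str
    demand_gbps: float
    priority: int  # hypothetical convention: lower number = more critical


def allocate(flows, capacity_gbps):
    """Strict priority across classes, max-min fair sharing within a class."""
    allocation = {}
    remaining = capacity_gbps
    for prio in sorted({f.priority for f in flows}):
        # Serve the smallest demands first so unused share flows to larger ones.
        tier = sorted(
            (f for f in flows if f.priority == prio),
            key=lambda f: f.demand_gbps,
        )
        for i, flow in enumerate(tier):
            fair_share = remaining / (len(tier) - i)
            grant = min(flow.demand_gbps, fair_share)
            allocation[flow.name] = grant
            remaining -= grant
    return allocation


if __name__ == "__main__":
    flows = [
        Flow("realtime-inference", demand_gbps=40.0, priority=0),
        Flow("rl-rollouts", demand_gbps=20.0, priority=1),
        Flow("batch-training", demand_gbps=100.0, priority=1),
    ]
    for name, gbps in allocate(flows, capacity_gbps=100.0).items():
        print(f"{name}: {gbps:.1f} Gbps")
```

In this example the latency-sensitive inference flow receives its full demand, the reinforcement learning flow is satisfied because it asks for less than its fair share, and batch training absorbs the remainder. Real fabrics enforce such policies in switch queues and congestion control rather than in a central function, so this is a conceptual model only.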

Transforming Networks into Abstraction Layers

Envision a network so sophisticated that it masks the underlying complexity of vast, heterogeneous infrastructure, presenting it to AI models as a single, unified computing resource—this concept of “the network as the computer” is revolutionizing AI infrastructure. Achieving this seamless abstraction demands overcoming significant obstacles, such as disparities in bandwidth, hardware variations across accelerators, and differences in network fabrics. It requires deep expertise in areas like routing protocols, congestion management, and GPU stack optimization to ensure smooth operation. Networking, in this paradigm, transcends its traditional role as a data pipeline, becoming an essential layer that simplifies the chaos of physical systems. This transformative approach allows AI developers to focus on model performance rather than infrastructure limitations, marking a significant shift in how networks underpin technological progress.

This abstraction also fosters greater scalability, enabling AI systems to expand without being constrained by the intricacies of underlying hardware. Networks must integrate diverse components—ranging from network interface cards to high-speed interconnects—into a cohesive system that operates as one. The challenge lies in maintaining consistency across varying distances and equipment types, a task that demands a full-stack approach to design and implementation. By abstracting these complexities, networking empowers AI to operate at unprecedented scales, supporting clusters that span continents while delivering performance akin to localized systems. This capability is not just a technical achievement; it represents a strategic advantage for companies aiming to deploy AI globally. The evolution of networks into abstraction layers is redefining infrastructure, making it a silent yet indispensable partner in AI’s ongoing transformation of industries and societies.

Prioritizing Reliability and Forward-Looking Innovation

As AI infrastructure reaches new heights of scale and complexity, the reliability of networking emerges as a non-negotiable priority, with systems required to deliver unwavering performance even under intense strain. Failures at this level can cascade, disrupting critical AI operations with far-reaching consequences, making rapid detection and mitigation essential. Network engineers are focused on building robust frameworks that anticipate and address issues before they escalate, ensuring uptime for workloads that power everything from research to real-time applications. Reliability is not just about maintaining the status quo; it’s about creating trust in AI systems that increasingly underpin global economies and essential services. Networking’s role in guaranteeing this dependability positions it as a linchpin in the broader AI ecosystem, where even minor disruptions can have outsized impacts on progress and adoption.
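As a rough illustration of what rapid detection can mean in practice, the sketch below flags links whose most recent heartbeat is older than a timeout. The function name `find_stale_links`, the link names, and the three-second timeout are hypothetical choices for this example; the article does not describe the detection mechanisms operators actually use.

```python
def find_stale_links(last_heartbeat, now, timeout_s):
    """Return the sorted names of links not heard from within timeout_s seconds.

    last_heartbeat maps a link name to the timestamp (in seconds) of its most
    recent heartbeat; now is the current timestamp on the same clock.
    """
    return sorted(
        name for name, seen in last_heartbeat.items() if now - seen > timeout_s
    )


if __name__ == "__main__":
    # Hypothetical link names and timestamps, chosen only for illustration.
    heartbeats = {
        "spine1-leaf3": 100.0,
        "spine2-leaf3": 96.5,
        "spine1-leaf4": 99.8,
    }
    print(find_stale_links(heartbeats, now=100.0, timeout_s=3.0))
```

A shorter timeout detects failures sooner but raises the risk of false alarms under transient congestion, which is the kind of trade-off a reliability-focused design has to tune.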

Equally critical is the industry’s commitment to continuous innovation, ensuring that networks remain agile in the face of AI’s unpredictable advancements. This involves integrating principles of high-performance computing with open, scalable designs that allow for rapid adaptation to new models and hardware. Networking cannot afford to be static; it must offer flexibility, enabling systems to evolve alongside emerging technologies without requiring complete overhauls. This forward-thinking approach is evident in efforts to anticipate shifts in AI demands over the coming years, from increased computational needs to novel workload types. By balancing reliability with innovation, networking is crafting a foundation for AI that is both stable and future-ready. The dual emphasis on dependability and adaptability highlights how networks are not just supporting AI’s current trajectory but actively shaping its potential for years to come.

Building Tomorrow’s AI Through Networking

The insights shared at events like Meta’s @Scale: Networking gathering make it evident that networking is pivotal in addressing the challenges of AI infrastructure. The immense financial commitments to build global clusters, the adaptation to increasingly complex workloads, and the transformation of networks into seamless abstraction layers all point to a unified industry vision. Reliability is being prioritized so that systems can withstand the pressures of scale, while innovation paves the way for adaptable designs that anticipate future needs. Moving forward, the focus should shift to deepening collaboration across tech sectors to refine these networked systems further. Exploring open standards and shared frameworks could accelerate progress and help keep AI infrastructure inclusive and scalable. As the industry advances, investing in next-generation networking talent and tools will be crucial to sustaining this momentum and keeping networks at the foundation of AI.