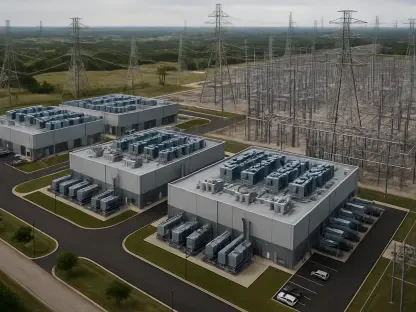

The global technology landscape is on the verge of a major transformation, with a multi-year expansion cycle poised to funnel $1.7 trillion into worldwide data center capital expenditures by the close of the decade. This surge in spending, driven by the demands of artificial intelligence, is more than an incremental increase; it signals a fundamental reshaping of digital infrastructure on an unprecedented scale. The growth is not a monolithic movement but a complex interplay of investment from established technology giants, emerging specialized players, and new national initiatives, all vying for a stake in the future of computing. The pace of this capital deployment is accelerating, pulling future milestones into the present and compelling every sector to reevaluate its technological roadmap in the face of an AI-powered world.

Hyperscaler Dominance in the AI Arms Race

The Unprecedented Investment by Tech Giants

The engine powering this financial explosion is overwhelmingly concentrated within a small group of elite U.S. hyperscalers, namely Amazon, Google, and Meta. These companies are projected to account for approximately half of all global data center capex by 2030, a concentration of resources that underscores their central role in the AI revolution. Having already entered a new phase of infrastructure expansion, with nearly $600 billion in combined capital expenditures committed at the start of this year, they show no sign of slowing their investment. Despite external scrutiny over the immediate financial returns of their AI ventures, these companies are drawing on their immense cash reserves and maintaining a long-term strategic focus on securing market share. This commitment reflects a belief that dominance in AI will define the next era of technological leadership, making the current level of investment not just an expenditure but a down payment on future relevance and profitability.

This acceleration in spending has dramatically outpaced earlier forecasts, bringing a future once thought to be several years away to our doorstep. Capex is now expected to approach the $1 trillion mark as early as this year, a milestone that was once a distant projection. This rapid revision of timelines is directly attributable to the growing size and complexity of emerging AI models, which demand correspondingly more powerful infrastructure for both training and inference. To sustain this pace without succumbing to operational or financial strain, hyperscalers are adopting new strategies. They are increasingly developing vertically integrated technology stacks, including custom networking solutions, to optimize performance and reduce reliance on third-party vendors. At the same time, they are turning to external financing to optimize cash flow and manage the immense costs of building and maintaining these next-generation data centers, so that their expansion can remain both aggressive and sustainable.

The Ripple Effect on Infrastructure

The capital being poured into AI extends far beyond the core accelerated compute servers, creating a powerful ripple effect across the entire data center ecosystem. The deployment of ever-larger and more intricate AI clusters escalates demand for a comprehensive suite of supporting infrastructure: ultra-high-performance networking fabrics to move data rapidly between thousands of processors, advanced storage capable of handling petabytes of training data, and significant inference capacity to serve AI models in real time. Furthermore, the immense power consumption and heat generation of these systems necessitate sophisticated and highly efficient power and cooling infrastructure. This phenomenon has been aptly described as AI being “the tide that lifts all boats,” a force that drives growth in every complementary segment of the data center market. The growth is not isolated to a single component but represents an interconnected expansion of the entire digital foundation.

This comprehensive build-out is fundamentally altering the composition of data center spending. Projections now indicate that accelerated servers, designed specifically for intensive workloads like AI model training and inference, could constitute as much as two-thirds of total data center infrastructure expenditure by the end of the decade. This marks a profound shift away from general-purpose computing and toward specialized, high-performance hardware tailored for artificial intelligence. The focus on accelerated computing reflects the industry’s recognition that traditional server architectures are insufficient to meet the computational demands of modern AI. As organizations continue to push toward larger and more complex models, investment in this specialized hardware will only intensify. That trend cements its position as the central component of the modern, AI-driven data center, and it will reshape supply chains and hardware innovation for years to come.

The Enterprise Conundrum and Strategic Insights

A Cautious Approach From the Enterprise Sector

In stark contrast to the aggressive, multi-billion-dollar expansion projects undertaken by hyperscalers, the broader enterprise sector has adopted a much more cautious posture toward data center investment. This hesitation stems from a combination of macroeconomic and geopolitical factors that create a climate of uncertainty. Unfavorable tariffs in key markets can significantly increase the cost of hardware and components, while restrictive monetary policies make financing for large capital projects more expensive and harder to secure. Beyond these external pressures, many enterprises remain fundamentally uncertain about the measurable returns on investment that AI can deliver for their specific business operations. Unlike hyperscalers, which can operate on longer investment horizons and absorb the costs of experimentation, most enterprises require a clearer line of sight to profitability before committing to the significant upfront costs of building out AI-ready infrastructure.

This disparity in investment philosophy is rooted in the differing risk profiles and resources of the two groups. Enterprises, even large ones, typically lack the vast financial reserves and dedicated research divisions that allow hyperscalers to pursue ambitious, long-term AI projects with uncertain immediate payoffs. The scale of investment required to build and operate competitive AI infrastructure, from specialized servers to advanced cooling systems, represents a formidable barrier to entry. For many companies, the potential benefits of custom AI solutions are still abstract, making it difficult to justify such a substantial capital outlay to stakeholders and boards. As a result, many adopt a more conservative “wait-and-see” strategy, relying on the cloud services offered by hyperscalers while they evaluate specific use cases and wait for the technology and its associated costs to mature, so that any future investment is both targeted and impactful.

Learning From the Leaders

Despite their more constrained investment capacity, enterprises are not without strategic options and can derive significant value from closely observing the spending trends of their hyperscaler counterparts. The capital allocation decisions made by these market leaders serve as a leading indicator for the future direction of cloud services and digital infrastructure. By tracking where, and in which technologies, hyperscalers are investing, enterprises can gain insight into where future service enhancements will emerge and, perhaps more importantly, where capacity bottlenecks may develop. For instance, heavy investment in a specific geographic region could signal an upcoming surge in service availability and performance there, while a focus on a particular type of storage technology might indicate a future industry standard. This intelligence is valuable for businesses formulating or refining their own multi-cloud strategies, and it helps them set more realistic execution timelines.

Applying this observational strategy allows businesses to move from a reactive to a proactive stance in their cloud and infrastructure planning. For example, if an enterprise notices that its primary cloud providers are investing heavily in high-performance networking infrastructure in a key operational region, it could infer an impending increase in demand for high-bandwidth applications. Armed with this foresight, the business could negotiate better terms on network services, secure necessary capacity before it becomes scarce and expensive, or adjust its own application deployment plans to align with the upgraded infrastructure. This intelligence, gleaned from the actions of industry giants, helps enterprises navigate the complex cloud landscape more effectively. It enables them to anticipate market shifts, avoid being caught in a resource crunch, and leverage the latest technological advancements without shouldering the full risk of pioneering investment.