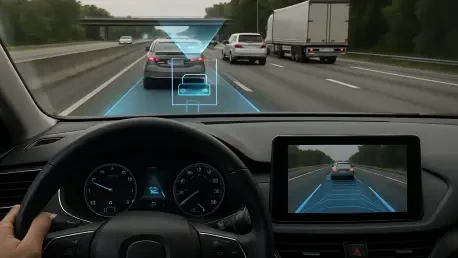

The pursuit of fully autonomous driving has shifted the engineering focus from enhancing individual sensor capabilities to building a comprehensive, interconnected technological ecosystem. While improvements in radar and LiDAR remain fundamental, they are no longer sufficient on their own to meet the stringent performance, safety, and reliability demands of the future. Further progress in advanced driver-assistance systems (ADAS) and high-level autonomy now hinges on the tight integration of supporting technologies. Successful sensor fusion depends on a confluence of advances in computing, high-speed connectivity, precise positioning, and artificial intelligence, all of which are being shaped and accelerated by rapidly evolving global regulatory standards. This integrated approach is paving the way for systems that can perceive, decide, and act with a level of awareness that far surpasses what isolated, on-board hardware can provide.

The Connectivity Ecosystem for Synergistic Perception

A foundational requirement for the next generation of sensor fusion is a robust and ubiquitous communication network that enables real-time data exchange through Vehicle-to-Everything (V2X) technology. This framework creates a dynamic, interconnected environment that extends far beyond the confines of a single vehicle. It encompasses multiple communication links: direct Vehicle-to-Vehicle (V2V) links to share intent and position, Vehicle-to-Infrastructure (V2I) communication with traffic signals and roadside units, Vehicle-to-Network (V2N) connections to cellular and cloud services, and Vehicle-to-Pedestrian (V2P) systems for the safety of vulnerable road users. This web is powered by technologies like Cellular V2X (C-V2X), which offers a direct, low-latency interface (the PC5 sidelink) for immediate vehicle interactions and a network-based mode (over the Uu cellular interface) for more advanced use cases. These capabilities support complex maneuvers like vehicle platooning, remote driving, and the crucial concept of “extended sensors,” which allows a vehicle to use data from sensors far beyond its immediate line of sight to anticipate and react to hazards proactively.
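The “extended sensors” idea can be illustrated with a minimal Python sketch. It assumes that every participant reports objects in one shared map frame (metres east and north), and it merges remote reports into the ego vehicle's own object list while skipping objects the vehicle already sees. The `Detection` type, the `merge_extended` function, the sender ids, and the 2 m matching radius are all hypothetical names and values chosen for illustration; they are not taken from any V2X standard.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    x: float      # metres east in a shared map frame (assumed)
    y: float      # metres north in a shared map frame (assumed)
    label: str    # object class, e.g. "car" or "pedestrian"
    source: str   # "local" or the id of the remote sender


def merge_extended(local, remote, match_radius_m=2.0):
    """Add remote detections that no already-known detection explains."""
    merged = list(local)
    for r in remote:
        duplicate = any(
            r.label == d.label
            and math.hypot(r.x - d.x, r.y - d.y) <= match_radius_m
            for d in merged
        )
        if not duplicate:
            merged.append(r)
    return merged


local = [Detection(10.0, 0.0, "car", "local")]
remote = [
    # Same car as the local detection, reported by a roadside unit.
    Detection(10.8, 0.5, "car", "rsu-17"),
    # Object beyond the ego vehicle's line of sight, reported by another vehicle.
    Detection(120.0, 3.0, "pedestrian", "veh-42"),
]
merged = merge_extended(local, remote)
```

Here the roadside unit's report is treated as a duplicate of the locally detected car, while the distant pedestrian is added to the list. A production system would also have to account for timestamps, position uncertainty, and trust in the sender, none of which this sketch models.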

The integration of 5G and the forthcoming 6G wireless networks serves as the critical catalyst for this highly connected ecosystem, providing the essential infrastructure to support its demanding requirements. These networks deliver the ultra-high bandwidth, minimal latency, and exceptional reliability needed to enable sophisticated, cloud-based sensor fusion applications. By facilitating the seamless and rapid sharing of enormous volumes of data between vehicle sensors, surrounding infrastructure, and other road users, 5G and 6G connectivity unlock the potential for more advanced, off-board processing. This paradigm shift allows for a more holistic and accurate real-time perception of the driving environment than any single vehicle could achieve with its on-board systems alone. This shared awareness creates a collective intelligence on the road, where vehicles can make more informed decisions based on a comprehensive understanding of traffic flow, road conditions, and potential dangers that are miles ahead, fundamentally enhancing the safety and efficiency of autonomous systems.
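To see why latency matters so much for off-board processing, it helps to convert a delay into the distance a vehicle covers before a result arrives. The short Python sketch below does that arithmetic. The latency values are illustrative assumptions, not measured figures for any particular 5G or 6G network, and `distance_during_latency` is a hypothetical helper name.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled while a message is in flight or being processed."""
    speed_m_per_s = speed_kmh / 3.6
    return speed_m_per_s * latency_ms / 1000.0


# Illustrative end-to-end delays in milliseconds (assumed, not measured).
for latency_ms in (10, 100, 500):
    d = distance_during_latency(108.0, latency_ms)
    print(f"{latency_ms:>4} ms at 108 km/h -> {d:.1f} m")
```

At 108 km/h, which is 30 m/s, a 10 ms delay corresponds to roughly 0.3 m of travel, 100 ms to about 3 m, and 500 ms to about 15 m.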

Intelligence and Precision at the Core

A primary challenge in advanced sensor fusion is meeting the immense computational demand of processing data streams from multiple high-resolution sensors simultaneously. Sending everything to a centralized, distant cloud server often introduces unacceptable latency for safety-critical driving decisions. Edge computing offers a compelling solution by processing data closer to its source, either within the vehicle’s own hardware or at the network edge. To make this approach feasible on the compute-constrained platforms found in vehicles, engineers employ techniques such as model compression and quantization, which reduce the size and arithmetic cost of machine learning models so they can run efficiently on less powerful hardware. Furthermore, event-driven processing prioritizes incoming data based on its significance, so that limited resources are spent on the most important inputs first. Through this decentralized and collaborative computing architecture, data fusion is accelerated to provide the real-time environmental perception, behavior decision-making, and navigation control essential for safe autonomous operation.
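As a rough illustration of the quantization mentioned above, the following Python sketch applies symmetric per-tensor 8-bit quantization to a handful of weights. It is one common scheme among several and is not tied to any specific automotive toolchain. The function names and sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale factor for the whole tensor; guard against all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.003, 0.5, -0.41]   # made-up example values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight is stored in one byte instead of the four bytes of a 32-bit float, at the cost of a rounding error of at most half the scale factor per weight. Note how the very small weight `0.003` collapses to zero, which is the kind of precision loss that has to be validated against accuracy requirements before deployment.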

The integration of artificial intelligence (AI) and machine learning (ML) is a transformative force, elevating sensor fusion from simple data aggregation toward genuine environmental understanding. AI algorithms excel at identifying and interpreting complex patterns within the vast datasets generated by sensors, enabling a system to handle nuanced scenarios that are nearly impossible to address with traditional rule-based programming. Machine learning also allows the system to adapt, improving its decision-making as it is exposed to new situations, and to function effectively even when faced with uncertain or noisy sensor data, a common real-world challenge. This adaptive intelligence is anchored by the Global Navigation Satellite System (GNSS), which is the only on-board sensor capable of providing an accurate and absolute global position. By offering a worldwide frame of reference for the vehicle’s location, GNSS serves as a crucial foundation for all the relative data gathered by other sensors, so that the vehicle can place itself, and everything it detects, in the world whenever satellite signals are available.
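The way an absolute position anchors relative sensor data can be sketched in a few lines of Python. The example assumes the GNSS fix has already been projected into a local east/north frame in metres, that heading is measured in degrees clockwise from north, and that a sensor reports an object as metres forward and metres to the left of the vehicle. The function name `ego_to_global` and all numbers are hypothetical.

```python
import math


def ego_to_global(ego_east, ego_north, heading_deg, forward_m, left_m):
    """Place an ego-relative detection into the global east/north frame.

    heading_deg is a compass heading: 0 = north, 90 = east (assumed convention).
    """
    h = math.radians(heading_deg)
    # Forward unit vector is (sin h, cos h); left unit vector is (-cos h, sin h).
    east = ego_east + forward_m * math.sin(h) - left_m * math.cos(h)
    north = ego_north + forward_m * math.cos(h) + left_m * math.sin(h)
    return east, north


# Vehicle at (500 m E, 200 m N), heading due east; object 20 m ahead, 2 m to the left.
east, north = ego_to_global(500.0, 200.0, 90.0, 20.0, 2.0)
```

With the vehicle heading due east, an object 20 m ahead and 2 m to the left lands at approximately 520 m east and 202 m north. Any error in the GNSS position or heading carries straight through to the object's global position, which is why the accuracy of the absolute reference matters for everything built on top of it.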

Driving Forces of Innovation and Adoption

The rapid advancement of these enabling technologies is not occurring in isolation; it is being significantly propelled by powerful industry and regulatory forces. The sheer complexity of modern autonomous driving systems necessitates deep and strategic partnerships between different technology providers. A prime example of this trend is the collaboration between BMW and Qualcomm Technologies. By combining powerful systems-on-chip with a jointly developed automated driving (AD) software stack, they engineered a highly integrated system designed specifically to meet the demanding requirements for SAE Level 2+ automated driving on highways. This partnership demonstrates a clear industry shift toward co-developed, holistic solutions where hardware and software are optimized together, rather than being treated as separate components. Such collaborations are becoming essential to manage the intricate interplay between sensing, computing, and decision-making, so that all parts of the system work together to deliver the required performance and reliability.

Simultaneously, the evolution of government and industry regulations is playing a critical role in setting clear performance benchmarks and safeguarding the safety of autonomous technologies. While the SAE Levels of Driving Automation provide a foundational framework, new and more specific regulations are emerging globally that push the boundaries of technological capability. For instance, China has enacted mandatory standards for Level 2 vehicles that specify a minimum sensor detection range and stringent resolution requirements, directly driving innovation in sensor hardware. Similarly, United Nations Regulation No. 171 on Driver Control Assistance Systems establishes formal definitions and safety requirements for systems that assist the driver, solidifying performance expectations. As these and other regulations continue to become more stringent, the advanced technologies for connectivity, computing, and positioning will become indispensable for any automaker aiming to achieve compliance and, more importantly, build lasting public trust in autonomous vehicles.

A Unified and Intelligent Future

The next generation of sensor fusion for autonomous driving is ultimately defined by a holistic and interconnected approach. Progress is no longer measured by the isolated performance of a single sensor but by the seamless integration of a diverse suite of technologies. Powerful edge computing, ultra-reliable 5G and 6G connectivity, adaptive AI algorithms, and precise GNSS positioning combine to create a distributed and intelligent system. This technological convergence allows vehicles to perceive, communicate, and make decisions with greater speed and accuracy than on-board sensing alone can deliver. Driven by the twin forces of collaborative innovation and the necessity to meet increasingly strict safety regulations, this fusion of diverse technologies is paving the way for safer, more capable, and ultimately fully autonomous transportation systems.