For years, the centralized cloud model has served as the backbone of digital transformation, but the explosive growth of data-generating devices is exposing its inherent limitations. Businesses are increasingly grappling with escalating operational costs, frustrating delays in time-sensitive applications, and a critical dependency on flawless internet connectivity that is not always guaranteed. In response to these pressures, a new paradigm is gaining significant traction. Edge computing represents a strategic shift in infrastructure, moving data processing and storage away from distant data centers and placing it closer to the physical locations where data is created. This decentralized approach does not aim to replace the cloud entirely but rather to augment it, fostering a more balanced and efficient hybrid ecosystem where immediate, time-critical tasks are handled locally while the cloud remains the hub for large-scale analytics and long-term data archival.

The Upside: Why Businesses Are Turning to the Edge

One of the most compelling arguments for adopting edge computing is its direct impact on both the bottom line and operational performance. By processing data at or near its source, organizations can dramatically reduce the volume of information that must be transmitted to a centralized cloud. Less data in transit means lower bandwidth and cloud service bills, a particularly attractive proposition for businesses that generate massive datasets. Keeping processing close to the data source also removes the round trip to a remote server, sharply cutting the latency of each response. For applications where milliseconds matter, such as IoT sensors monitoring industrial machinery for predictive maintenance or autonomous vehicles making split-second navigational decisions, this near-instantaneous feedback is not merely an improvement but a prerequisite for safe and effective operation.
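To make the bandwidth argument concrete, here is a minimal Python sketch of edge-side filtering. It assumes a node that receives a stream of numeric sensor readings and, instead of forwarding every reading, sends one summary per fixed-size window plus any individual reading above an alert threshold. The names `edge_filter`, `Summary`, and `ALERT_THRESHOLD`, and the threshold value itself, are hypothetical illustrations, not any particular product's API.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical alert threshold for a vibration sensor (units are illustrative).
ALERT_THRESHOLD = 8.0


@dataclass
class Summary:
    """Compact stand-in for one window of raw readings."""
    window_start: int  # index of the first reading in the window
    count: int
    average: float
    peak: float


def edge_filter(readings, window_size, threshold=ALERT_THRESHOLD):
    """Return the messages an edge node would send upstream instead of every raw reading."""
    messages = []
    for start in range(0, len(readings), window_size):
        window = readings[start:start + window_size]
        # One summary per window replaces window_size individual transmissions.
        messages.append(("summary", Summary(start, len(window), mean(window), max(window))))
        # Out-of-range readings are still forwarded individually so nothing urgent is lost.
        messages.extend(("alert", r) for r in window if r > threshold)
    return messages


raw = [1.0] * 1000
raw[250] = 9.5    # simulated spikes
raw[731] = 12.0
msgs = edge_filter(raw, window_size=100)
print(len(raw), "raw readings ->", len(msgs), "upstream messages")
```

With one summary per 100 readings and two out-of-range spikes, the 1,000 raw readings shrink to 12 upstream messages. The window size and threshold are the levers that trade upstream volume against the level of detail available centrally.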

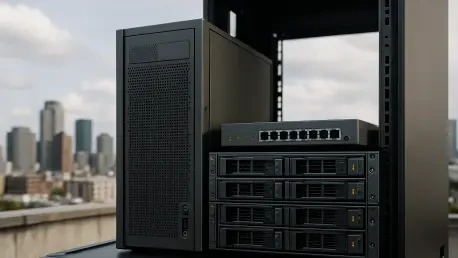

Beyond the immediate benefits of speed and cost savings, edge computing adds a layer of operational resilience and deployment flexibility that a purely cloud-dependent model cannot provide. Because edge devices can keep processing data and executing core functions when internet connectivity is intermittent or absent, businesses can maintain continuity during network outages. This capability is especially valuable for industries operating in remote locations, such as mining or agriculture, and provides essential reliability wherever constant uptime is critical, as with retail point-of-sale systems. Moreover, edge hardware has become increasingly compact, lightweight, and powerful, making it possible to deploy high-performance computing in non-traditional environments and opening new possibilities for mobile operations and sophisticated fieldwork that were previously impractical.
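Offline operation of this kind is commonly built on a store-and-forward pattern. The Python sketch below assumes a hypothetical `send` callable that raises `ConnectionError` when the uplink is down. Each event (here, a point-of-sale transaction) is acted on locally at once, and its upstream copy waits in a bounded queue until connectivity returns. `StoreAndForward` and `FakeLink` are illustrative names, not a specific library.

```python
from collections import deque


class StoreAndForward:
    """Hypothetical edge uplink: act locally at once, queue upstream copies while offline."""

    def __init__(self, send, max_buffer=10_000):
        self._send = send  # transmits one message; assumed to raise ConnectionError when offline
        # Bounded queue: if an outage outlasts capacity, the oldest entries are silently dropped.
        self._buffer = deque(maxlen=max_buffer)

    def handle(self, event, act_locally):
        act_locally(event)         # the core function runs regardless of connectivity
        self._buffer.append(event)
        self.flush()

    def flush(self):
        """Send queued events in order; stop at the first failure. Returns True if fully drained."""
        while self._buffer:
            try:
                self._send(self._buffer[0])  # peek first, so a failed send loses nothing
            except ConnectionError:
                return False
            self._buffer.popleft()
        return True

    @property
    def pending(self):
        return len(self._buffer)


class FakeLink:
    """Simulated uplink that can be switched off to mimic an outage."""

    def __init__(self):
        self.online = True
        self.delivered = []

    def send(self, message):
        if not self.online:
            raise ConnectionError("uplink down")
        self.delivered.append(message)


link, recorded = FakeLink(), []
register = StoreAndForward(link.send)
register.handle("sale-1", recorded.append)
link.online = False                       # outage begins
register.handle("sale-2", recorded.append)
register.handle("sale-3", recorded.append)
print(recorded, register.pending)         # all three sales recorded locally, two awaiting upload
link.online = True                        # connectivity restored
register.flush()
print(link.delivered)
```

The bounded buffer is a deliberate choice. A real deployment would have to decide whether to drop, compress, or spill to local storage when an outage outlasts the buffer's capacity.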

The Hurdles: Navigating the Challenges of Edge Computing

However, the transition to a decentralized architecture introduces a far more complex security landscape that organizations must be prepared to navigate. In a traditional centralized cloud environment, security efforts can be concentrated on fortifying a single, well-defined digital perimeter. Edge computing dissolves this perimeter, turning every connected device, potentially numbering in the hundreds or thousands, into a possible entry point for malicious actors. This greatly expanded attack surface makes the network much harder to secure. A single compromised device could be used to inject false data into the system and disrupt operations, or serve as a backdoor into the core corporate network, threatening the integrity and security of the entire organization’s data.

The practical considerations of cost and data governance also present considerable hurdles that must be carefully weighed. While edge computing can significantly lower ongoing operational expenses related to cloud usage, it often requires a substantial upfront capital expenditure for purchasing and deploying capable hardware at each edge location. Beyond this initial investment, organizations face the ongoing complexities and costs associated with managing, maintaining, patching, and updating a widely distributed fleet of devices, a task that can be far more resource-intensive than managing a centralized infrastructure. Furthermore, if not governed by a clear data strategy, local processing can inadvertently create data silos. When valuable information is analyzed and stored exclusively on individual devices without being aggregated centrally, it can lead to a fragmented and incomplete understanding of overall operations, hindering large-scale analytics and holistic strategic decision-making.
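One way to keep local processing from hardening into data silos is to have each site send compact, mergeable summaries that a central service can combine. The Python sketch below assumes numeric readings and uses count, sum, minimum, and maximum, which merge without loss (up to floating-point rounding). `SiteSummary` and the site names are hypothetical.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class SiteSummary:
    """Hypothetical per-site rollup that an edge node sends instead of, or alongside, raw data."""
    count: int
    total: float
    minimum: float
    maximum: float

    @classmethod
    def from_readings(cls, readings):
        return cls(len(readings), sum(readings), min(readings), max(readings))

    def merge(self, other):
        # Count, sum, min, and max combine directly, so the central view of these
        # statistics matches what the raw readings would have produced.
        return SiteSummary(
            self.count + other.count,
            self.total + other.total,
            min(self.minimum, other.minimum),
            max(self.maximum, other.maximum),
        )

    @property
    def mean(self):
        return self.total / self.count


sites = {"plant-a": [2.0, 4.0], "plant-b": [6.0, 8.0, 10.0]}
summaries = [SiteSummary.from_readings(r) for r in sites.values()]
overall = reduce(SiteSummary.merge, summaries)
print(overall.mean)
```

In this example the combined mean is 30.0 / 5 = 6.0. Naively averaging the two site means (3.0 and 8.0) would give 5.5, which is the kind of distortion a fragmented view produces. Statistics such as medians or percentiles do not merge this simply and would need a different summary structure.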

A Framework for Strategic Decision Making

To determine if an edge computing strategy aligns with organizational goals, leaders must begin by critically evaluating their operational requirements. The first question to address is how vital real-time data processing is to the business model and its competitive standing. If the ability to generate and act on instantaneous insights provides a distinct market advantage, the ultra-low latency offered by an edge architecture presents a compelling case for adoption. Following this, a thorough assessment of the operating environment is necessary. Does the business function in locations where internet connectivity is unreliable, slow, or prohibitively expensive? For any scenario where network stability is a vulnerability, the capacity of edge systems to function offline provides an essential layer of business continuity that a cloud-only model simply cannot deliver, transforming a critical weakness into a manageable operational variable.

Ultimately, the decision comes down to a careful weighing of strategic trade-offs. A successful implementation hinges on a frank assessment of financial and technical readiness: does the organization have the capital for the initial hardware investment and the in-house expertise to manage a distributed infrastructure? A robust, comprehensive cybersecurity plan that secures every endpoint across the expanded attack surface is not merely a recommendation but a non-negotiable prerequisite. Finally, the investment is viable only with a long-term vision for scalability, ensuring that the architecture can grow with the company without becoming unmanageably complex. For businesses where localized processing, speed, and reliability are paramount, edge computing offers a powerful solution, but only when it is implemented with a clear-eyed strategy for managing its inherent complexities.
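The questions and trade-offs in this framework can be condensed into a simple checklist. The Python sketch below is a hypothetical simplification. It treats real-time needs and unreliable connectivity as the drivers for considering the edge, and treats capital, expertise, a security plan, and a scalable design as prerequisites. The field names and the three possible recommendations are illustrative assumptions, not a formal methodology. A real assessment would weigh these factors rather than reduce them to yes/no answers.

```python
from dataclasses import dataclass


@dataclass
class EdgeAssessment:
    # Drivers: reasons to consider the edge at all (hypothetical yes/no simplification).
    needs_real_time: bool          # do instantaneous insights give a competitive advantage?
    unreliable_connectivity: bool  # is connectivity at the sites unreliable, slow, or expensive?
    # Prerequisites: readiness conditions from the trade-offs discussed above.
    has_capital: bool              # budget for upfront hardware at each location
    has_expertise: bool            # in-house skills to run a distributed fleet
    has_security_plan: bool        # a plan that secures every endpoint
    scalable_design: bool          # architecture can grow without unmanageable complexity


PREREQUISITES = ("has_capital", "has_expertise", "has_security_plan", "scalable_design")


def recommend(a: EdgeAssessment) -> str:
    """Map an assessment to one of three illustrative recommendations."""
    if not (a.needs_real_time or a.unreliable_connectivity):
        return "cloud-only is likely sufficient"
    gaps = [name for name in PREREQUISITES if not getattr(a, name)]
    if gaps:
        return "close gaps first: " + ", ".join(gaps)
    return "strong candidate for edge"


print(recommend(EdgeAssessment(
    needs_real_time=True, unreliable_connectivity=False,
    has_capital=True, has_expertise=True,
    has_security_plan=False, scalable_design=True,
)))
```

Separating drivers from prerequisites mirrors the two halves of the framework. The first half asks whether the edge is worth considering. The second asks whether the organization is ready to run it.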